Allowing traffic into a K3s cluster in a self-hosted environment

December 14, 2023

My previous post discussed some options for self-hosted networking. Getting traffic to your host machine on your local network is only part of the problem, though, as the application or website also needs to be hosted on a machine within your network. While I have previously shown a quick and easy Docker Compose solution, this post covers a solution better suited to production self-hosting: running on K3s, a lightweight Kubernetes distribution.

What is K3s?

K3s is a “certified Kubernetes distribution built for IoT & Edge computing”, providing the main features of Kubernetes in a much smaller and more efficient package. The K3s binary can be as little as 50MB depending on the version, and K3s can run on as little as 512MB of RAM, so it’s perfect for a small self-hosting setup such as one running on a Raspberry Pi. While there are some limitations, its small footprint and efficiency make it a go-to solution for all sorts of other use cases too.

The main Kubernetes distribution comes with a lot of bloat that isn’t installed with K3s, but K3s does install some built-in components automatically to help with getting up and running, such as SQLite, a Helm controller, a software load balancer, and an ingress controller.

Installing K3s

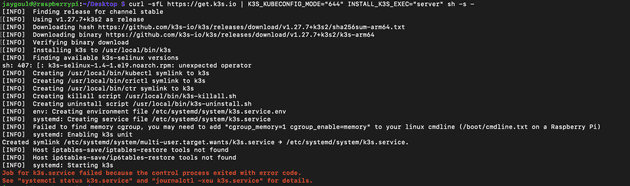

I installed with the following configuration:

```shell
curl -sfL https://get.k3s.io | K3S_KUBECONFIG_MODE="644" INSTALL_K3S_EXEC="server" sh -s -
```

Note that I have omitted the --flannel-backend none option to ensure that the version I install comes with all the built in components.

The K3S_KUBECONFIG_MODE option sets the permissions of the kubeconfig file (/etc/rancher/k3s/k3s.yaml) to 644, making it readable by unprivileged users on the host machine, so there is no need to run each command with sudo.
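K3s can also read its server options from a config file instead of environment variables on the install command. A minimal sketch of the same kubeconfig permission setting in that form, assuming the default config file path /etc/rancher/k3s/config.yaml (create it before running the install script, or restart K3s after editing it):

```yaml
# /etc/rancher/k3s/config.yaml (default path read by the K3s server at startup)
# Same effect as K3S_KUBECONFIG_MODE="644" on the install command:
# the kubeconfig at /etc/rancher/k3s/k3s.yaml is written with mode 0644,
# so unprivileged users can run kubectl without sudo.
write-kubeconfig-mode: "0644"
```

Options in this file apply whenever the K3s service starts, independent of which environment variables the install script was run with.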

You may notice an error when installing:

This can be fixed by adding cgroup_enable=cpuset cgroup_memory=1 cgroup_enable=memory to the end of the single line in /boot/cmdline.txt. The docs don’t say so, but cgroup_enable=cpuset is required in order to run on 32-bit Raspberry Pi OS. A manual reboot is required afterwards for the changes to take effect.

Running kubectl commands against K3s

In order to run kubectl, helm or any other commands against K3s, you need to use the K3s kubeconfig file. By default the KUBECONFIG location isn’t set to K3s, so running something like helm install may show the following error:

```text
Error: INSTALLATION FAILED: Kubernetes cluster unreachable: Get "http://localhost:8080/version": dial tcp [::1]:8080: connect: connection refused
```

To stop this, you must point the KUBECONFIG location to K3s with:

```shell
export KUBECONFIG=/etc/rancher/k3s/k3s.yaml
```

Installing Helm

I like to manage my Kubernetes deployments with Helm for versioning and values options, and the same works with K3s. You can skip this and manage your K3s cluster in the same way you would manage Kubernetes with plain config files, but here are the installation commands for Helm:

```shell
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
chmod 700 get_helm.sh
./get_helm.sh
```

Installing K9s

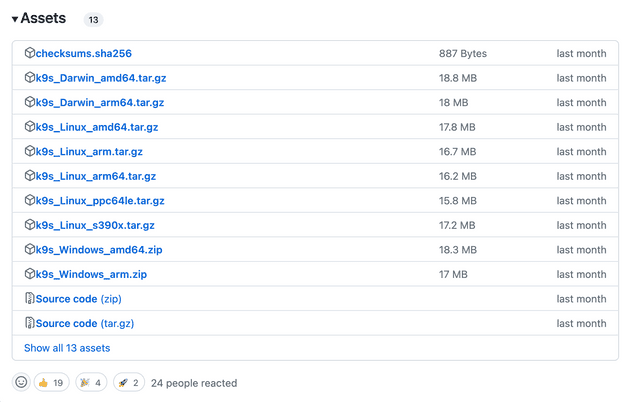

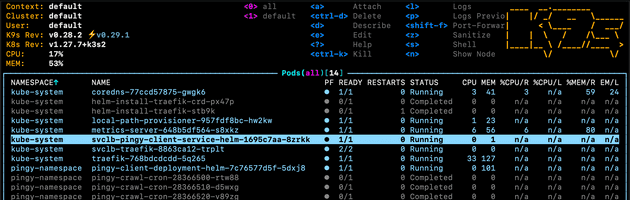

One more tool I use when managing Kubernetes is K9s. It visualises a Kubernetes cluster in an interactive terminal UI, which I think is much cleaner and more effective than running standard kubectl commands.

To install it I first tried Snap, but this failed to run on the latest 64-bit Raspberry Pi OS. Instead I downloaded the latest executable from the k9s GitHub releases and ran it directly on the Pi.

Once installed, just run k9s to start it. Again, be sure to run export KUBECONFIG=/etc/rancher/k3s/k3s.yaml before running k9s so that it points at the K3s installation.

A side note for running Next.js apps on Raspberry Pi with K3s

I originally started with the 64-bit Raspberry Pi OS, but this produced an error in Next.js about an unsupported system page size:

```text
<jemalloc>: Unsupported system page size
<jemalloc>: Unsupported system page size
memory allocation of 10 bytes failed
```

I had to revert to the 32-bit Raspberry Pi OS instead. I don’t know if this was a Next.js issue or a K3s issue.

Exposing K3s app to the internet

Now that K3s is installed, you can run your app as you would on a standard Kubernetes (K8s) installation. There can be many services running in a cluster, most of which don’t need to be accessed from outside. In a simple client/server example such as a Next.js app, however, we might want to expose the Next.js client so it can be accessed from outside the cluster.

This can be done in a few ways, including port forwarding, a NodePort service, and an ingress with load balancers. Each of these is covered in this post.

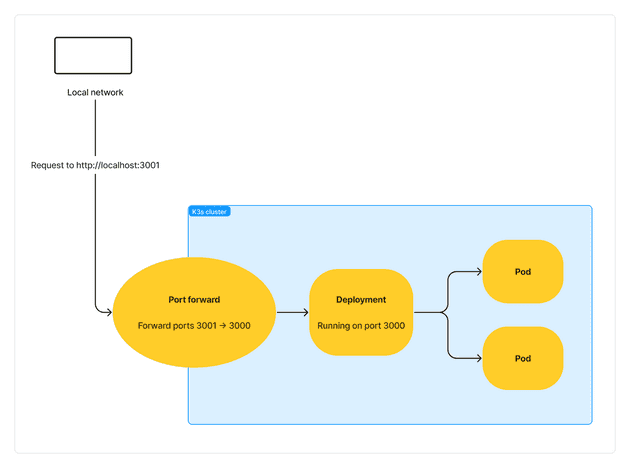

Port forwarding on K3s cluster

Port forwarding forwards a port on your host machine to a resource in the cluster, allowing you to access a service within your cluster from localhost. An example command is:

```shell
kubectl --namespace pingy-namespace port-forward deployment/pingy-client-deployment-helm 3001:3000
```

In the above command, 3001 is the local port exposed outside of the cluster, and 3000 is the port being forwarded from inside the cluster. Port 3000 must be a valid port on the K3s resource being forwarded. In my case, the deployment called pingy-client-deployment-helm has a containerPort of 3000, which is forwarded to outside the cluster.

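We can then visit http://localhost:3001 from the host machine, or http://[host-ip]:3001 from another device on the local network, for example to access a service hosted on a Raspberry Pi from a MacBook on the same network. Note that kubectl port-forward only listens on localhost by default, so the second option also needs --address 0.0.0.0 added to the command.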

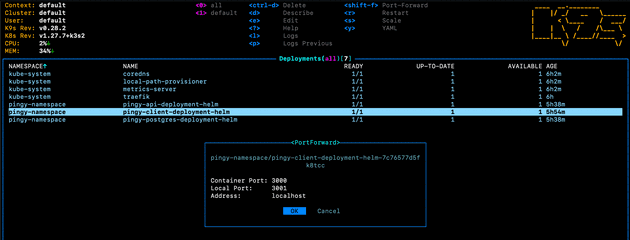

Port forwarding with kubectl is a pain because the kubectl port-forward command doesn’t return, so the terminal has to stay open. This is why I prefer to port forward with k9s, as it can be done more easily as a background task:

The active port forwards can then be viewed in k9s with the :pf command:

I don’t see this as a permanent solution though, and only use port forwarding for development/testing purposes.

NodePort

By default when a service is created it has the type of ClusterIP, which means the service is only accessible from within the cluster. We can specify the type NodePort, which allows the service to be accessed from outside the cluster:

apiVersion: v1

kind: Service

metadata:

name: pingy-client-nodeport-service-helm

namespace:

spec:

type: NodePort # Specify the service type

selector:

app: clientlabel

ports:

- name: http-development

port: 3031

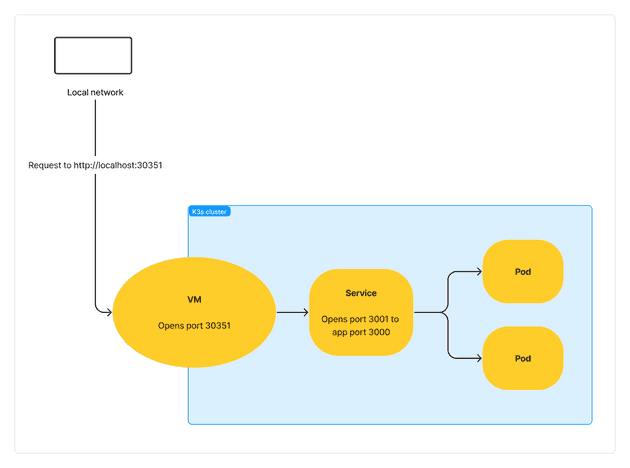

targetPort: 3000The above configuration creates a service that opens app port 3000 to service port 3031, and then Kubernetes allows traffic to port 3031 from a VM/Node which opens a node port in the range of 30000 – 32767. The node port is accessible from the host machine, so the app can be accessed using the node port with a URL such as http://localhost:30351.
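By default the node port is picked from that range automatically, so it can change if the service is deleted and recreated. A minimal sketch of pinning it with the nodePort field, assuming 30351 (the port from the URL above) is free on the node, and assuming the service lives in pingy-namespace (the original example leaves the namespace blank):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: pingy-client-nodeport-service-helm
  namespace: pingy-namespace # assumption: the example above leaves this blank
spec:
  type: NodePort
  selector:
    app: clientlabel # must match the labels on the Next.js client pods
  ports:
    - name: http-development
      port: 3031 # port the service listens on inside the cluster
      targetPort: 3000 # containerPort of the Next.js client
      nodePort: 30351 # fixed node port; must be inside 30000-32767 and unused
```

Pinning the port keeps bookmarks stable, at the cost of having to avoid node port clashes yourself.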

Similar to port forwarding, this solution (in my opinion) is more suited for development and testing purposes.

Accessing the Traefik dashboard (optional)

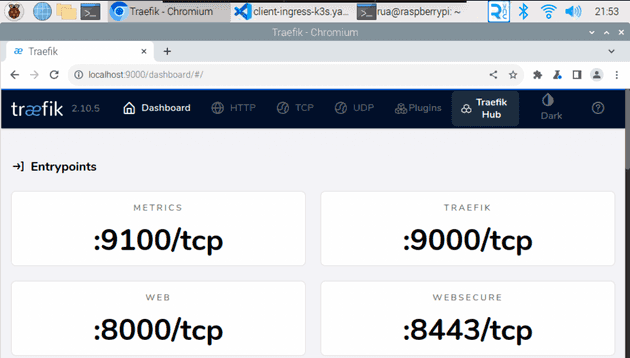

Before we route to our app, it can be useful to view the Traefik dashboard. This isn’t accessible from outside the cluster by default, but it’s easy to set up. Running the following kubectl command will set up a port forward:

```shell
kubectl port-forward -n kube-system "$(kubectl get pods -n kube-system | grep '^traefik-' | awk '{print $1}')" 9000:9000
```

This port forward will allow us to visit http://localhost:9000/dashboard/ (don’t forget the trailing slash!), which will show us the dashboard:

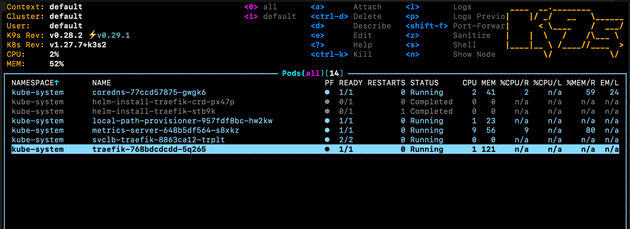

This is possible because, as well as setting up the ingress controller and load balancer with Traefik and ServiceLB respectively, K3s runs Traefik itself as a pod in the kube-system namespace, and that pod serves the dashboard alongside Traefik’s other entrypoints:

This pod has a port open for each Traefik entrypoint, including port 9000 for the dashboard. The port forward works because it forwards local port 9000 to port 9000 on the Traefik pod, which the command finds with grep '^traefik-'.

Ingress and Load Balancers

One more type of Kubernetes service is LoadBalancer. Using a load balancer together with an ingress configuration is much better suited to a production-ready solution. By default K3s has Traefik and ServiceLB installed, and while you can opt out of these during installation, I found these built-in tools helpful when first experimenting with K3s.

Traefik is an ingress controller while ServiceLB is a load balancer. An ingress controller carries out the rules defined in Ingress resources, routing incoming HTTP(S) traffic to services within the cluster. A load balancer provides an entry point into the cluster from the outside, providing an external IP address.

The default setup is for any ingress created in K3s to automatically use the Traefik ingress controller. For example, in k3s, an ingress such as the following would default to use the Traefik ingress controller:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: pingy-client-ingress-helm

namespace: pingy-namespace

annotations:

ingress.kubernetes.io/rewrite-target: /

spec:

rules:

- http:

paths:

- pathType: Prefix

path: "/v1"

backend:

service:

name: pingy-client-service-helm

port:

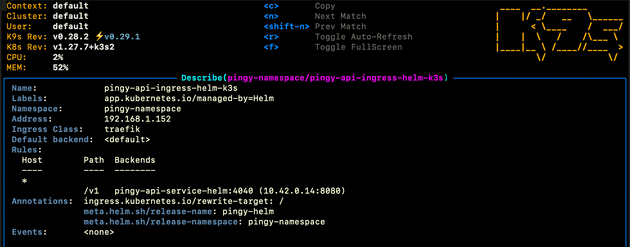

number: 3030This can be observed by describing the ingress once it is in k3s:

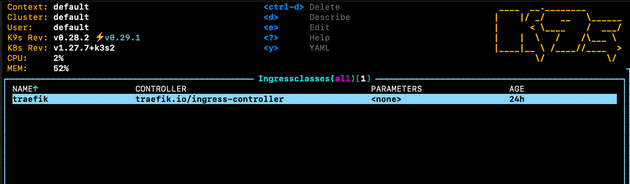

We can view the ingress class for traefik:

From here we can view the raw ingress class file, which shows that this ingress class is the default, and that the ingress class is using the traefik ingress controller (traefik.io/ingress-controller):

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

annotations:

ingressclass.kubernetes.io/is-default-class: "true"

meta.helm.sh/release-name: traefik

meta.helm.sh/release-namespace: kube-system

creationTimestamp: "2023-12-06T19:41:45Z"

generation: 1

labels:

app.kubernetes.io/instance: traefik-kube-system

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: traefik

helm.sh/chart: traefik-25.0.2_up25.0.0

name: traefik

resourceVersion: "582"

uid: 43570c07-14b7-48a3-afad-b432f14dca73

spec:

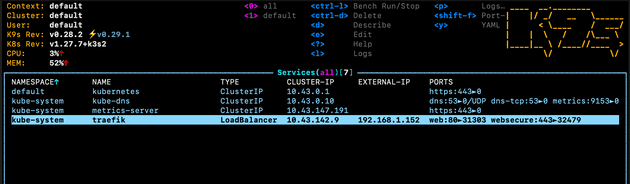

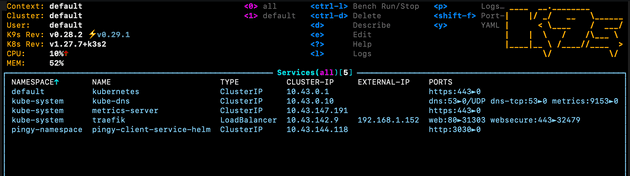

controller: traefik.io/ingress-controllerAs explained in the docs, the traefik ingress controller automatically deploys service with the type of LoadBalancer with an external IP address that can be used to access the cluster from the outside:

In a managed Kubernetes offering from a cloud provider such as AWS (EKS), deploying a service with type LoadBalancer triggers the cloud provider to create an actual load balancer for us. K3s doesn’t have external, separate load balancers by default. Instead, it uses ServiceLB, a built-in load balancer. It is ServiceLB that determines the load balancer configuration, such as the external IP and the port configuration.

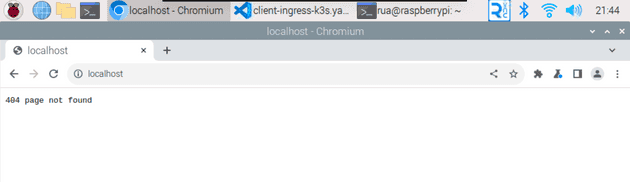

By default, ServiceLB uses the host machine’s local network IP address as the load balancer’s external IP, and the Traefik LoadBalancer service opens ports 80 and 443 for standard HTTP and HTTPS access. This means that you should be able to access the cluster right off the bat by visiting http://localhost. Doing this should send you to a 404 page:

This isn’t too useful right now. We have access from outside, but the load balancer isn’t sending us anywhere useful, and an ingress is not yet configured.

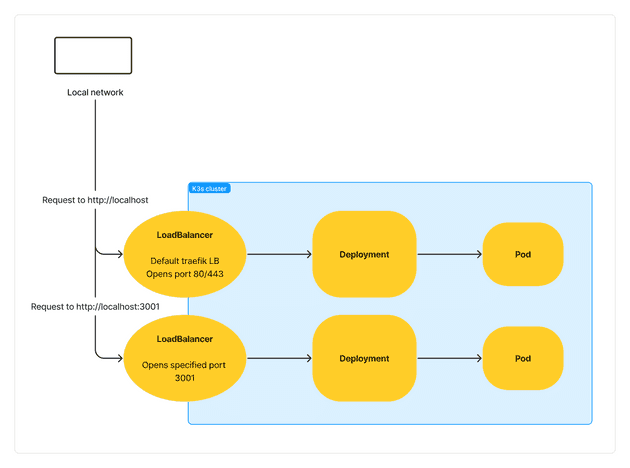

Directing traffic with additional load balancers

One option is to create a separate load balancer for each service you wish to be accessible from outside the cluster. This is done by setting spec.type to LoadBalancer in the service we want to access:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: pingy-client-service-helm
  namespace: pingy-namespace
spec:
  type: LoadBalancer # specified here
  selector:
    app: clientlabel
  ports:
    - name: http
      port: 3030
      targetPort: 3000
```

Without this specification of LoadBalancer, a service will default to being of type ClusterIP, which only makes the service accessible from within the cluster.

Also note that for a service to send traffic to a deployment, the service’s selector must match the labels on the deployment’s pods. More on that later in this post.

K3s is configured so that whenever a service is created with the LoadBalancer type, ServiceLB creates a DaemonSet that runs a corresponding pod on each node, with a name prefixed with svclb-. This pod is then responsible for directing traffic from the load balancer IP to the specified ports in the service.

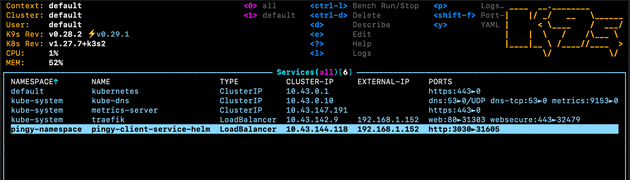

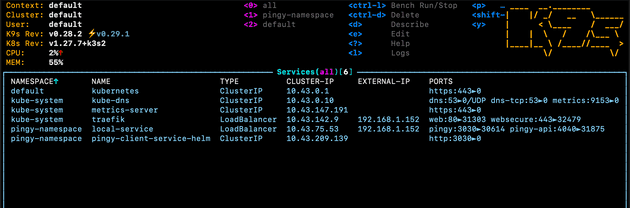

In my case, for example, here are my services. This shows the default Traefik load balancer (which, as described earlier, allows traffic in on ports 80 and 443), and my own pingy-client-service-helm service with the LoadBalancer type, created with the configuration file above:

And here’s the corresponding pod which is responsible for directing traffic to the service within this node:

This means that the pingy-client-service-helm LoadBalancer service lets me visit http://localhost:3030 to see my app. Port 3030 is the port the service exposes, and in my case it directs traffic to my app running on port 3000, as shown in the Service file above.

With the K3s and Traefik setup, incoming ports 80 and 443 are already used by the default Traefik LoadBalancer service. This means additional LoadBalancer services must use other ports. Additional, custom LoadBalancer services might be useful if you want to allow access to an internal part of your cluster where you don’t mind having a port in the URL, such as some sort of analytics or other non-user-facing service.

Although this solution works, it will mean creating a separate load balancer for each service we want to access from outside the cluster. This isn’t too much of a problem but there are some caveats:

- There’s no cost associated with K3s load balancers (because they are software LBs), but a cloud provider like AWS will provision a dedicated load balancer, which comes with a cost. This can stack up for clusters with many services that need outside access.

- With K3s and Traefik specifically, additional load balancers can’t use web ports 80 and 443, so load balancer services must be accessed on different ports (e.g. accessing a service with a URL like https://my-app.com:3230).

- It can get quite messy to have a separate load balancer for every service.

One solution to address all the above is to use an ingress to direct traffic.

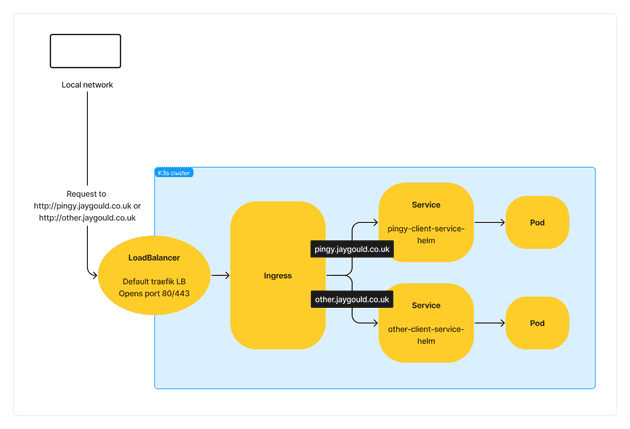

Directing traffic with ingress

Earlier I covered the default ingress setup with Traefik on k3s. We can use an ingress with Traefik ingress controller to direct traffic to a specific service from outside the cluster.

Here’s the ingress:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pingy-client-ingress-helm
  namespace: pingy-namespace
  annotations:
    ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: pingy-client-service-helm
                port:
                  number: 3030
```

This ingress passes its configuration to the Traefik ingress controller. It specifies that traffic coming in with any host name should be directed to pingy-client-service-helm on port 3030. Although this ingress doesn’t create a load balancer, and the pingy-client-service-helm it points to doesn’t need to be a LoadBalancer either (it can be a default ClusterIP service), traffic still reaches the cluster because it comes in through the default LoadBalancer service created for Traefik (the Traefik ingress controller specifically):

The default load balancer opens ports 80 and 443, so with the above ingress we’re able to visit http://localhost and see our app: the traffic comes in through the default load balancer and is then routed by the ingress to our service.
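The ingress above relies on traefik being marked as the default IngressClass. If you would rather not depend on that annotation (for example, if a second ingress controller is installed later), the class can be set explicitly with spec.ingressClassName. A sketch of the same ingress with the class named explicitly, assuming the IngressClass is called traefik as in the K3s output shown earlier (the rewrite-target annotation is left out here for brevity):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pingy-client-ingress-helm
  namespace: pingy-namespace
spec:
  ingressClassName: traefik # IngressClass that K3s creates for its bundled Traefik
  rules:
    - http: # no host, so any host name matches
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: pingy-client-service-helm
                port:
                  number: 3030
```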

Another common configuration for ingresses is to direct traffic based on host name. I have multiple separate applications on my Raspberry Pi, each in a separate namespace made up of multiple services. If all services were in the default namespace, one ingress config could route traffic coming in for different hosts to the corresponding app. It’s recommended to keep things organized with namespaces though, and because an ingress can only route to services in its own namespace, this means one ingress config per namespace:

```yaml
# pingy-namespace
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pingy-client-ingress-helm
  namespace: pingy-namespace
  annotations:
    ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: pingy.jaygould.co.uk # specify incoming host
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: pingy-client-service-helm
                port:
                  number: 3030
---
# other-app-namespace
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: other-app-client-ingress-helm
  namespace: other-app-namespace
  annotations:
    ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: other.jaygould.co.uk # specify incoming host
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: other-client-service-helm
                port:
                  number: 5115
```

These direct incoming traffic to our defined services based on the incoming hostname. Visiting pingy.jaygould.co.uk, for example, takes us to the pingy-client-service-helm service, which is configured to accept connections on port 3030. So we can now access our app’s homepage with the URL http://pingy.jaygould.co.uk, and our other app on http://other.jaygould.co.uk.

Carrying on from Cloudflare tunnels

Routing traffic based on host in an ingress config works the same for a local Raspberry Pi as it would for any cloud-based hosting, thanks to Cloudflare Tunnels. I covered this briefly in my last post, along with other possible solutions for self-hosting on a Raspberry Pi such as port forwarding with dynamic DNS, but Cloudflare Tunnels provide a much more secure and tidy solution.

Given the following ingress file:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: pingy-client-ingress-helm

namespace: pingy-namespace

annotations:

ingress.kubernetes.io/rewrite-target: /

spec:

rules:

- host: pingy.jaygould.co.uk # specify incoming host

http:

paths:

- pathType: Prefix

path: "/"

backend:

service:

name: pingy-client-service-helm

port:

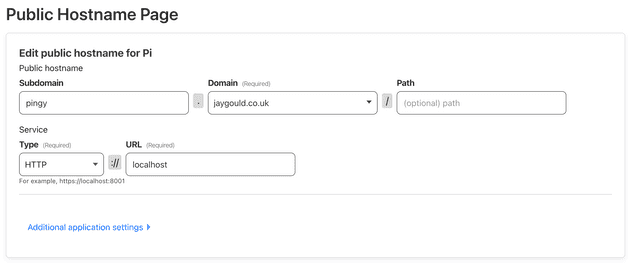

number: 3030Cloudflare Tunnels can be configured to receive requests on the hostname pingy.jaygould.co.uk, which will tunnel the traffic to my Raspberry Pi, to the Pi’s local address, http://localhost:

This is possible because setting up Cloudflare Tunnels requires installing cloudflared on the Pi itself, which connects to Cloudflare to provide the background network config.

With traffic coming in via the tunnel to the Pi and then on to localhost, the traffic hits the default Traefik LoadBalancer (which has ports 80 and 443 open on localhost) and passes through to the K3s ingress. As requests are proxied through Cloudflare, HTTPS is handled by Cloudflare too, so visiting the full URL of https://pingy.jaygould.co.uk sends a user to the app hosted on the Raspberry Pi.
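For reference, if the tunnel is managed locally rather than from the Cloudflare dashboard, the same hostname-to-localhost mapping is written in cloudflared’s own config file. A sketch, assuming a locally managed tunnel with the config at ~/.cloudflared/config.yml; the tunnel UUID and credentials path are placeholders for the values cloudflared generates when the tunnel is created:

```yaml
# ~/.cloudflared/config.yml on the Pi (locally managed tunnel; path is an assumption)
tunnel: YOUR_TUNNEL_UUID # placeholder
credentials-file: /home/pi/.cloudflared/YOUR_TUNNEL_UUID.json # placeholder path
ingress:
  # Send requests for this hostname to Traefik's LoadBalancer on port 80
  - hostname: pingy.jaygould.co.uk
    service: http://localhost:80
  # cloudflared requires a final catch-all rule with no hostname
  - service: http_status:404
```

By default cloudflared passes the original Host header through to the local service, which is what lets the host-based rule in the K3s ingress match pingy.jaygould.co.uk.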

Accessing the app locally on the Pi

With our current load balancer and ingress, we can accept connections from the web through the hostname https://pingy.jaygould.co.uk, but it can also be useful to access the app locally on the Pi itself. This might be helpful to debug or test things, as the Pi is essentially the “production” environment. Our K3s cluster is so far configured to route based on hostname, but visiting pingy.jaygould.co.uk in a browser on the Pi will reach our app over the internet, via Cloudflare. We want to bypass Cloudflare Tunnels and view the app directly on the host machine.

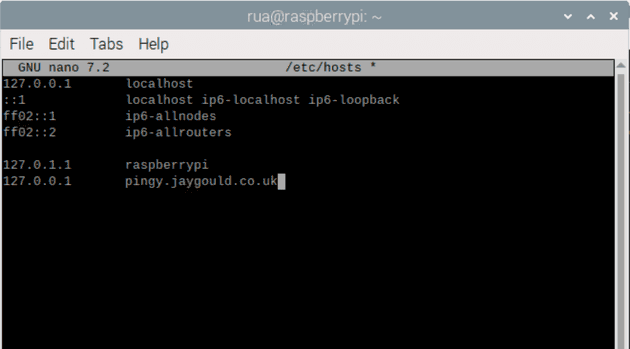

One solution is to update the hosts file to allow local visits to pingy.jaygould.co.uk to be directed to localhost:

This works a treat, but I don’t like messing with my hosts file too often, especially for things like this where the hosts file would need updating all the time to include/remove different project URLs. I prefer a solution which is more aligned with how Kubernetes works.

Another solution is to use a second load balancer. The default LoadBalancer is created automatically for Traefik, but additional LoadBalancers can be created by adding a service with a spec.type of LoadBalancer. Creating such a load balancer in K3s assigns the host machine’s IP again, which means we can accept traffic on localhost as before, but on ports other than 80 and 443, which the default Traefik load balancer already uses. This is what a second load balancer service could look like, with different ports opened:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: local-service
  namespace: pingy-namespace
spec:
  type: LoadBalancer
  selector:
    loadBalancer: local
  ports:
    - name: pingy
      port: 3030
      targetPort: 3000
```

This would create the following service list in K3s:

You may be thinking, why can’t we just use an ingress config? Well, an ingress can’t route traffic based on ports: it only directs HTTP/HTTPS traffic (by host and path) arriving on the ports the ingress controller listens on. Load balancers, on the other hand, can route based on ports.

The purpose of this other load balancer is solely to direct traffic from localhost, on different local ports. The end result is, for example, to visit http://localhost:3030 to visit the Pingy app (which is accessible via https://pingy.jaygould.co.uk from the web, thanks to Cloudflare Tunnels), and eventually other apps.
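For the “other apps” part, keep in mind that a service only selects pods in its own namespace, and ServiceLB binds each LoadBalancer port on the node, so each additional app would need its own local service on a port nothing else is using. A sketch for the hypothetical other app from the host-based ingress example, assuming its pods carry the loadBalancer: local label and listen on container port 3000 (both assumptions):

```yaml
# Hypothetical local LoadBalancer for a second app in other-app-namespace
apiVersion: v1
kind: Service
metadata:
  name: local-service
  namespace: other-app-namespace
spec:
  type: LoadBalancer
  selector:
    loadBalancer: local # only matches pods in other-app-namespace
  ports:
    - name: other-app
      port: 3031 # hypothetical; must not clash with 3030 or Traefik's 80/443
      targetPort: 3000 # hypothetical containerPort of the other app
```

With this applied, the other app would be reachable on http://localhost:3031 alongside Pingy on http://localhost:3030.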

Be sure to add selectors and labels to link services and deployments

Another important thing to note is that the spec.selector of loadBalancer: local has been added. Selectors are how a service finds the pods it sends traffic to, so in this case the local-service LoadBalancer service above only reaches our deployment’s pods if they carry the loadBalancer: local label:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pingy-client-deployment-helm
  namespace: pingy-namespace
spec:
  replicas: 1
  selector:
    matchLabels:
      app: clientlabel
      loadBalancer: local
  template:
    metadata:
      labels:
        app: clientlabel
        loadBalancer: local
    spec:
      imagePullSecrets:
        - name: secret-gitlab
      containers:
        - name: pingy-client-container-helm
          image: registry.gitlab.com/pingy2/pingy-client:latest
          ports:
            - containerPort: 3000
```

Alternatives to default Traefik and ServiceLB with k3s

An alternative to using the default Traefik and ServiceLB is to use an NGINX ingress controller and MetalLB. Nginx itself is a web server, which is often used as the example app image in a deployment when explaining how Traefik works. The nginx web server is not to be confused with the ingress-nginx (or nginx-ingress) ingress controllers, which are an alternative to Traefik. The nginx ingress controllers are much more popular, but this post is aimed at covering what can be done with the default K3s config.
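Swapping them out starts with telling K3s not to deploy the bundled components. A sketch using the K3s config file at its default path, /etc/rancher/k3s/config.yaml; the equivalent install flags are --disable traefik --disable servicelb. On an existing cluster this also removes the already-deployed Traefik and ServiceLB, so the ingress and LoadBalancer setup described above would stop working until replacements are installed:

```yaml
# /etc/rancher/k3s/config.yaml
# Skip the bundled Traefik ingress controller and ServiceLB load balancer,
# e.g. before installing an NGINX ingress controller and MetalLB instead.
disable:
  - traefik
  - servicelb
```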

Senior Engineer at Haven